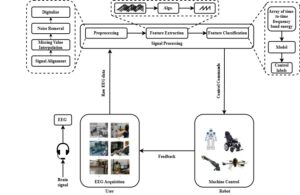

Brain-Computer Interface Approach for Mobile Robot Navigation

Our project brings together neuroscience, robotics, and artificial intelligence, using Brain-Computer Interface (BCI) technology to let a user navigate a mobile robot directly with brain signals. This direct brain-to-robot communication bypasses conventional manual controls. It offers a more seamless way for people to work with robots, advances assistive technologies, and improves the user experience.

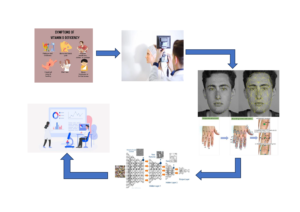

Visual Inspection Hybrid System for Vitamin-D Deficiency

Vitamin D is vital for health, but deficiencies are widespread due to poor diets, declining soil nutrients, and limited access to traditional diagnostics. Blood tests, though accurate, are invasive and costly, especially in low-resource areas. This project introduces a non-invasive, machine learning-based system to detect vitamin deficiencies via images of skin, hair, and nails, enhancing accessibility and supporting telemedicine.
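One way such a hybrid system could combine its three image sources is sketched below. It assumes, hypothetically, that a separate classifier for skin, hair, and nail images each outputs a probability of deficiency, and it merges them with weighted late fusion (a simple averaging technique chosen for illustration). The function name, the weights, and the 0.5 threshold are all assumptions, not the project's published design.

```python
from typing import Dict, Optional, Tuple

# Hypothetical per-modality weights; real values would be tuned on validation data.
DEFAULT_WEIGHTS: Dict[str, float] = {"skin": 0.4, "hair": 0.3, "nails": 0.3}


def fuse_predictions(
    probs: Dict[str, float],
    weights: Optional[Dict[str, float]] = None,
    threshold: float = 0.5,
) -> Tuple[float, bool]:
    """Weighted late fusion of per-modality deficiency probabilities.

    Modalities missing from `probs` (e.g. no nail photo was taken) are skipped
    and the remaining weights are renormalised so they still sum to one.
    """
    weights = weights or DEFAULT_WEIGHTS
    used = {m: w for m, w in weights.items() if m in probs}
    if not used:
        raise ValueError("input contains no known modality")
    total = sum(used.values())
    score = sum(probs[m] * w for m, w in used.items()) / total
    return score, score >= threshold


score, suspected = fuse_predictions({"skin": 0.8, "hair": 0.6, "nails": 0.7})
print(f"fused score={score:.2f}, deficiency suspected={suspected}")
# → fused score=0.71, deficiency suspected=True
```

Late fusion keeps each image model independent, so a missing photo only removes one term from the average instead of invalidating the whole prediction.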

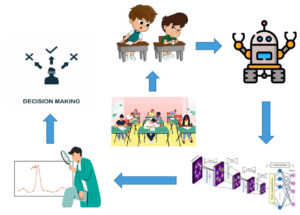

Gen AI-Enabled Robot-Student Interaction for Examination Invigilation

Examinations are key to assessing student knowledge, but traditional invigilation methods face issues like human error, bias, and fatigue. These challenges are heightened in large-scale or remote exams. Automated invigilation systems using AI and computer vision help detect malpractice in real time, improving fairness and efficiency. This technology reduces human workload, enhances security, and ensures academic integrity.

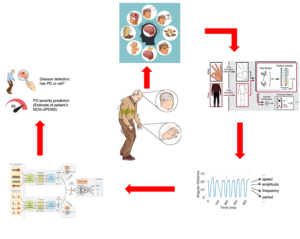

Multimodal Vision System for Mobility Assessment in Parkinson’s Elderly

Falls are a major cause of injury and death among the elderly, often leading to reduced mobility and independence. Physical activity and functional mobility are crucial for healthy ageing, and their decline lowers quality of life. Parkinson's disease, a progressive neurological disorder caused by the loss of dopamine-producing neurons in the brain, further worsens mobility and independence. Although there is no cure, treatments such as medication, therapy, and deep brain stimulation help manage symptoms.

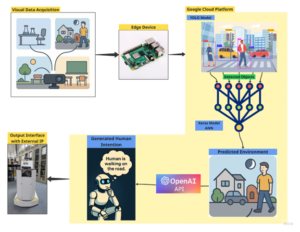

Human-Like Intention Generation Based on Visual Perception of Humanoid Robots

This research proposes a method for enhancing a robot's ability to identify its environment through visual perception. We explore five environments (electronics lab, mechatronics lab, lecture halls, roads, and playgrounds), using Computer Vision object detection to identify 43 distinct objects. An AI model was trained to improve detection accuracy on live camera feeds, and a Python script was developed to count the objects found in each environment, creating a dataset of object occurrences. This dataset was then used to train a model that identifies the environment from its object counts. The approach shows how object detection and neural networks can be combined for intelligent environment identification, enhancing robotic autonomy in varied settings.
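The count-then-classify pipeline described above can be sketched as follows. This is a minimal illustration under stated assumptions: the object labels are a hypothetical subset (the 43 classes are not listed here), detector output is assumed to arrive as a list of label strings per frame, and a simple nearest-centroid classifier stands in for the neural network the project actually trained.

```python
from collections import Counter
from math import dist
from typing import Dict, List, Sequence

# Hypothetical subset of the 43 object classes, in a fixed column order.
CLASSES = ["oscilloscope", "multimeter", "chair", "projector", "car", "football"]


def count_vector(detections: Sequence[str]) -> List[int]:
    """Turn one frame's detected labels into a fixed-order count vector."""
    counts = Counter(detections)
    return [counts[c] for c in CLASSES]


def fit_centroids(samples: Dict[str, List[List[int]]]) -> Dict[str, List[float]]:
    """Average the count vectors of each environment (nearest-centroid training)."""
    centroids = {}
    for env, vectors in samples.items():
        n = len(vectors)
        centroids[env] = [sum(col) / n for col in zip(*vectors)]
    return centroids


def predict(centroids: Dict[str, List[float]], vector: List[int]) -> str:
    """Return the environment whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda env: dist(centroids[env], vector))


# Toy training data built from hypothetical detector outputs.
train = {
    "electronics lab": [count_vector(["oscilloscope", "multimeter", "chair"]),
                        count_vector(["oscilloscope", "oscilloscope", "chair", "chair"])],
    "lecture hall": [count_vector(["chair"] * 10 + ["projector"]),
                     count_vector(["chair"] * 8 + ["projector"])],
    "road": [count_vector(["car", "car", "car"])],
}
centroids = fit_centroids(train)
print(predict(centroids, count_vector(["chair"] * 9 + ["projector"])))
# → lecture hall
```

Representing each scene as a fixed-order count vector is what lets any tabular classifier, including a small neural network, be trained on the object-occurrence dataset.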

Vision-based Assistance Robot in Laboratory Environment

This study introduces a teaching assistant robot designed to enhance laboratory education in Mechatronics by increasing student participation and supporting teachers. The robot incorporates real-time navigation, sensor and actuator detection, voice command recognition, pneumatic symbol identification, facial authentication, voice response, and environmental awareness. Mecanum wheels give it omnidirectional mobility for smooth movement, while LiDAR-based SLAM builds a map of the lab and localizes the robot within it, supporting obstacle avoidance and path planning. The robot uses ROS2 for navigation in complex environments and integrates Computer Vision models for intuitive interaction. Future work aims to improve indoor navigation, robot localization, environmental awareness, and adaptive cruise control.
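The omnidirectional motion mentioned above comes from Mecanum-wheel inverse kinematics, which converts a desired body velocity into four wheel speeds. The sketch below uses the common textbook formulation for a four-wheel base with 45° rollers; the function name and parameter names are hypothetical, and sign conventions (roller orientation, wheel order, axis directions) differ between robots, so the signs should be checked against the actual hardware.

```python
from typing import Tuple


def mecanum_wheel_speeds(
    vx: float, vy: float, wz: float, r: float, lx: float, ly: float
) -> Tuple[float, float, float, float]:
    """Inverse kinematics for a four-wheel Mecanum base (assumed 45-degree rollers).

    vx: forward velocity (m/s); vy: leftward velocity (m/s);
    wz: counter-clockwise yaw rate (rad/s); r: wheel radius (m);
    lx, ly: half wheelbase and half track width (m).
    Returns wheel angular speeds in rad/s as
    (front_left, front_right, rear_left, rear_right).
    """
    k = lx + ly  # lever arm that couples yaw rate into each wheel
    front_left = (vx - vy - k * wz) / r
    front_right = (vx + vy + k * wz) / r
    rear_left = (vx + vy - k * wz) / r
    rear_right = (vx - vy + k * wz) / r
    return front_left, front_right, rear_left, rear_right


# Pure sideways motion to the left: diagonal wheel pairs spin in opposite directions.
print(mecanum_wheel_speeds(vx=0.0, vy=0.5, wz=0.0, r=0.05, lx=0.2, ly=0.15))
```

In a ROS2 setup, a function like this would typically sit behind the velocity command published by the navigation stack, turning the planner's (vx, vy, wz) output into motor setpoints.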

Environment-Aware Adaptive Cruise Control for Assistive Wheelchair

This research focuses on developing an Environment-Aware Adaptive Cruise Control (EA-ACC) system for assistive wheelchairs, aiming to enhance mobility, safety, and independence for individuals with limited mobility. Unlike conventional adaptive cruise control in road vehicles, EA-ACC integrates environmental awareness to navigate complex indoor and crowded spaces. Using sensors such as LiDAR and cameras, it detects dynamic obstacles and responds with real-time speed and direction adjustments. Using AI and machine learning, the system continuously adapts to user needs and surroundings, and its user-centered design includes override and sensitivity controls for a safer and more practical mobility solution.
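The speed-adjustment idea, together with the override and sensitivity controls, can be illustrated with a simple rule-based sketch. Everything here is an assumption for illustration: the function name, the distance thresholds, the linear slow-down ramp, and the choice that override bypasses the slow-down but not the hard stop. The project's actual controller is described as AI-driven and is not reproduced here.

```python
import math
from typing import Sequence


def acc_speed(
    user_speed: float,
    ranges: Sequence[float],
    sensitivity: float = 0.5,
    override: bool = False,
    stop_dist: float = 0.4,
    base_slow_dist: float = 1.0,
    max_slow_dist: float = 2.5,
) -> float:
    """Scale the user's requested forward speed by the nearest obstacle distance.

    ranges: LiDAR distances (m) in the direction of travel; non-finite readings
    (inf/nan, i.e. no return) are ignored.
    sensitivity in [0, 1] widens the slow-down zone from base_slow_dist
    to max_slow_dist. override bypasses the slow-down but not the hard stop.
    """
    valid = [d for d in ranges if math.isfinite(d)]
    nearest = min(valid, default=math.inf)
    if nearest <= stop_dist:
        return 0.0  # hard stop: never overridden in this sketch
    if override:
        return user_speed
    s = min(max(sensitivity, 0.0), 1.0)  # clamp sensitivity to [0, 1]
    slow_dist = base_slow_dist + s * (max_slow_dist - base_slow_dist)
    if nearest >= slow_dist:
        return user_speed
    # Linear ramp: 0 at stop_dist, full requested speed at slow_dist.
    return user_speed * (nearest - stop_dist) / (slow_dist - stop_dist)


print(round(acc_speed(1.0, [3.0, 1.2, math.inf], sensitivity=0.5), 2))
# → 0.59
```

Raising the sensitivity makes the chair begin slowing earlier for the same obstacle, which is one plausible reading of a user-adjustable sensitivity control.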